SECURE ACCESS TO YOUR NETWORK RESOURCES

ON THE FIRST DAY, WORST DAY AND EVERY DAY

THE NETWORK RESILIENCE PLATFORM

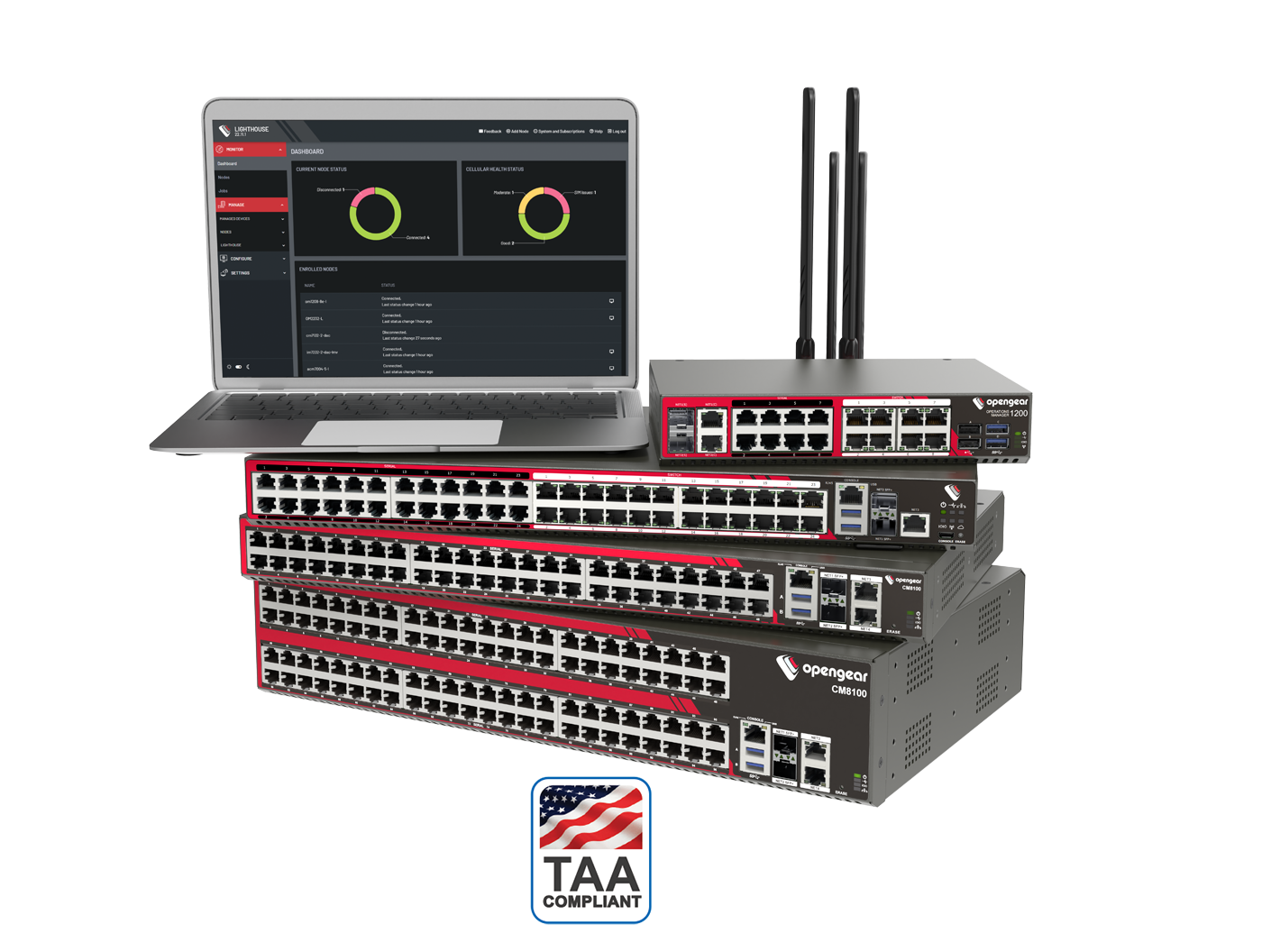

Lighthouse Software

Lighthouse Software provides access to all your network resources, offering remote IP access and multi-instance monitoring of your critical resources from a single portal.

OM1200 Operations Manager

The OM1200 combines the capabilities of a Smart OOB™ console server with the flexibility of automation in a compact form factor. It offers 4-8 serial ports, a 4-8 port GbE switch, an x86 CPU and a TPM 2.0 chip for secure deployment and automation at edge locations.

OM2200 Operations Manager

The OM2200 combines the capabilities of a Smart OOB™ Console Server with the flexibility of automation. It provides 16-48 software-selectable serial ports, 4G global cellular access, and an x86 CPU for running standard Docker, Ansible and Python tools.

CM8100

The CM8100 product family for data centers and enterprise networks is available in 1GbE and 10GbE models. The CM8100 Console Manager provides up to 96* serial ports, enterprise-grade security using a TPM 2.0 module, and independent monitoring using dual power supplies.

*96 serial ports are available on CM8100 10G models.

\\ RESOURCES

\\ LATEST POSTS

Enhance Your Network Infrastructure with Opengear’s Extended Warranty Program

In today's interconnected world, network resilience is paramount for the success of any organization.…

Best Practices for Mastering Network Resilience

In today's digital world, the resilience of your network is a critical factor in…