The third installment in the Road to Resilience webinar series covered why network resilience is a necessity for maintaining uptime in the data center. The discussion was hosted by Roy Chua, Principal at AvidThink, and featured Mark Harris, SVP of Marketing at The Uptime Institute, and Ryan Hogg, Senior Product Manager at Opengear.

Multi-layer Approach to Uptime

The first topic discussed was how network resilience depends on more than just the blinking lights on boxes in the data center. Harris explained that you need to take a multi-layer approach to evaluating risk to uptime. Everything under the business services umbrella is part of the risk equation. The Uptime Institute also looks at everything below the box and everything above the box. Opengear appliances live in the middle layer and are focused on performance in this IT equipment layer. Hogg pointed out that in this middle layer, “Opengear provides a point of presence in the data center so if you don’t have a physical person hands-on, you do have that ability to log in remotely and be able to access the equipment.”

Harris said, “You have to take all three of those layers and essentially have to multiply that risk. You have to consider the availability at the top layer where you’re doing a logical movement of workloads, and then multiply that by the ability for the middle layer, the boxes, to do work, and then multiply that by the ability for the platform itself to do work. The multiplication of those three layers is the actual risk factor for business service delivery.” Harris helps his customers state in business terms what results they desire. You need to be able to specify something like, “I desire the data center to be able to operate under these conditions with this kind of performance.”

It’s the ability to calculate the risks in all three of those layers that tells you whether you can resiliently deliver business services.
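The multiplication Harris describes can be sketched in a few lines of Python. This is only an illustration, not The Uptime Institute's method: the layer names and the 99.9% figures are made-up assumptions, and the calculation assumes the three layers fail independently of each other.

```python
from math import prod

HOURS_PER_YEAR = 8760  # non-leap year


def combined_availability(layer_availabilities):
    """Multiply per-layer availabilities (each a fraction between 0 and 1).

    Assumes the layers fail independently, which is a simplification.
    """
    values = list(layer_availabilities)
    for value in values:
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"availability must be between 0 and 1, got {value}")
    return prod(values)


# Hypothetical figures for illustration only.
layers = {
    "workload_movement": 0.999,  # top layer: logical movement of workloads
    "it_equipment": 0.999,       # middle layer: the boxes
    "platform": 0.999,           # bottom layer: the facility itself
}

combined = combined_availability(layers.values())
expected_downtime_hours = (1 - combined) * HOURS_PER_YEAR

print(f"Combined availability: {combined:.4%}")
print(f"Expected downtime per year: {expected_downtime_hours:.1f} hours")
```

The point of the sketch is that the combined figure is always lower than the weakest single layer, so three layers that each look acceptable on their own can still add up to more downtime than the business expects.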

Evaluating Risk and Creating Priorities

At a high level, Harris helps his customers clarify their needs. They should be able to state, “I need this set of applications all the time and the value to me is much higher than this other set of applications.” He works with customers to decide which tier level they want to implement, and they literally pull the plug on data centers as part of the evaluation in the certification process.

Harris offered a quick litmus test for more specific middle-layer risk evaluation. He said, “Ask the technologists in your organization which business applications are being serviced by the boxes that they maintain. If they don’t know that answer, they probably haven’t done a good enough job connecting those dots. The person dealing with the boxes should absolutely know what business service is affected by the uptime of that box.”

Over time, the information about what’s going on in a network changes. If it’s not continually documented, you probably have a higher risk profile than your business expects. If you’re afraid to touch what’s in your data center because you don’t know what’s in there, that’s a red flag.

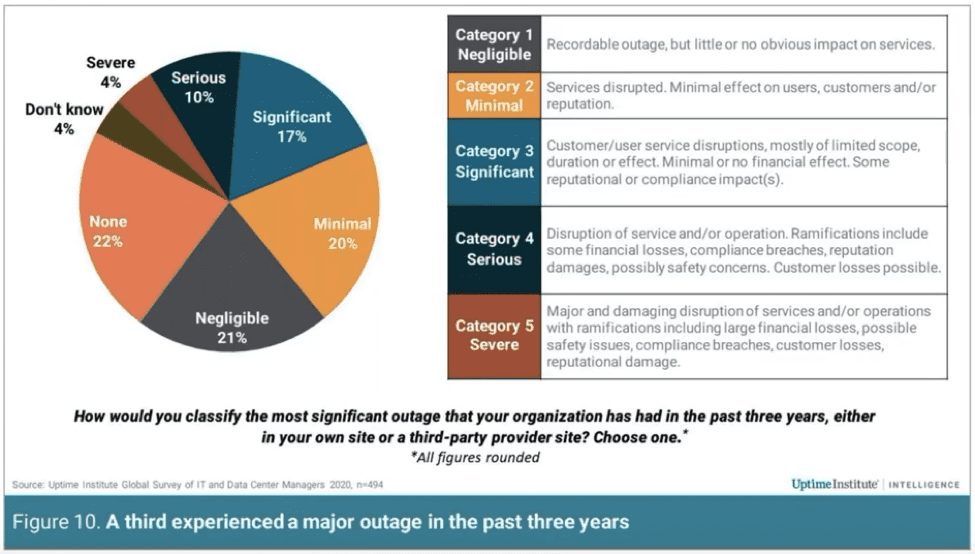

Network Failure Trends

The conversation then shifted to recent trends in network failures. I was surprised to learn that the number of publicly reported outages, their duration, and their cost to customers are all increasing across the board. Hogg said, “With less access to the data center due to travel restrictions and health restrictions there’s less appetite to do resiliency testing.” Harris pointed out that today, companies often have redundancy built in, but that’s not enough.

In the past, when a data center failed, things just stopped. These days other systems take over, but that raises your risk profile because the second failure has a much higher likelihood of occurring than the first failure. He added, “Also, your capacity goes down. Although you may have designed something to handle a million transactions a minute, when failures happen and things reconfigure and workloads get migrated and boxes get rebooted you’re now at 900,000 transactions per minute. How well is your business going to operate when you need a million transactions but are running at 900,000?”
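Harris's capacity example works out as simple arithmetic. The short Python sketch below uses the two figures from his quote; the function name and the idea of expressing the gap as a fraction of demand are illustrative assumptions, not something presented in the webinar.

```python
def capacity_shortfall(required_per_minute, available_per_minute):
    """Return the unmet demand per minute and that gap as a fraction of demand."""
    if required_per_minute <= 0:
        raise ValueError("required_per_minute must be positive")
    shortfall = max(required_per_minute - available_per_minute, 0)
    return shortfall, shortfall / required_per_minute


# Figures taken from the quote above.
required = 1_000_000   # transactions per minute the business needs
degraded = 900_000     # transactions per minute after failover

shortfall, fraction = capacity_shortfall(required, degraded)
print(f"Unserved transactions per minute: {shortfall:,}")
print(f"Share of demand not served: {fraction:.0%}")
```

In other words, redundancy keeps the service running, but at this degraded level one in ten transactions has nowhere to go for as long as the failover lasts.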

Looking Ahead

For years there’s been a push to the cloud, but a recent survey by The Uptime Institute showed that cloud adoption is growing very slowly. Harris said that for many years to come, more than half of all work done in business will continue to happen in non-cloud environments. He said, “You can’t just write a check to get out of the issue of having to run good management and good IT.”

Movement to the edge is increasing more rapidly. Harris reported, “More than half of our survey respondents said that the edges are going to be part of their game plan moving forward.”

Hogg discussed the challenges of the edge and how Opengear addresses them. IT equipment at the edge faces many risks, such as changes in temperature, corrosives from diesel generators, and dust. All of that affects the lifetime and reliability of the equipment. He added, “Being able to not only reach the equipment when it’s operating on site but deploying equipment at multiple sites requires a lot of travel. Opengear lets you do secure zero-day provisioning without requiring skilled hands on-site.” A contractor with a pallet of equipment can set it up, plug it in, and have it brought up remotely.
